
The Blur-Noise Trade Off Dataset

Introduction

This dataset was used to validate the restoration-performance models for image deblurring presented in [Boracchi and Foi 2012].

Image Acquisition Settings

Images were acquired with a Canon EOS 400D DSLR camera mounted on a tripod in front of a monitor playing a movie in which a natural image was progressively translated along a specific trajectory. The camera was carefully positioned so that the imaging sensor was parallel to the monitor (we checked that, in every picture, the window displaying the movie appeared rectangular), and images were acquired while the movie was playing. The resulting blur can therefore be treated as spatially invariant (convolutional).
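Because a single PSF describes the whole frame, each observation can be written with the usual convolutional model. The notation below is ours, not taken from the dataset: y is the sharp image, v the motion-blur PSF, z the raw observation and η zero-mean noise. The variance law assumes the Poissonian-Gaussian model of [Foi et al. 2008], whose parameters a and b are the ones estimated and reported with the dataset.

$$
z(x) = (y \circledast v)(x) + \eta(x), \qquad \operatorname{var}\{z(x)\} = a\,(y \circledast v)(x) + b
$$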

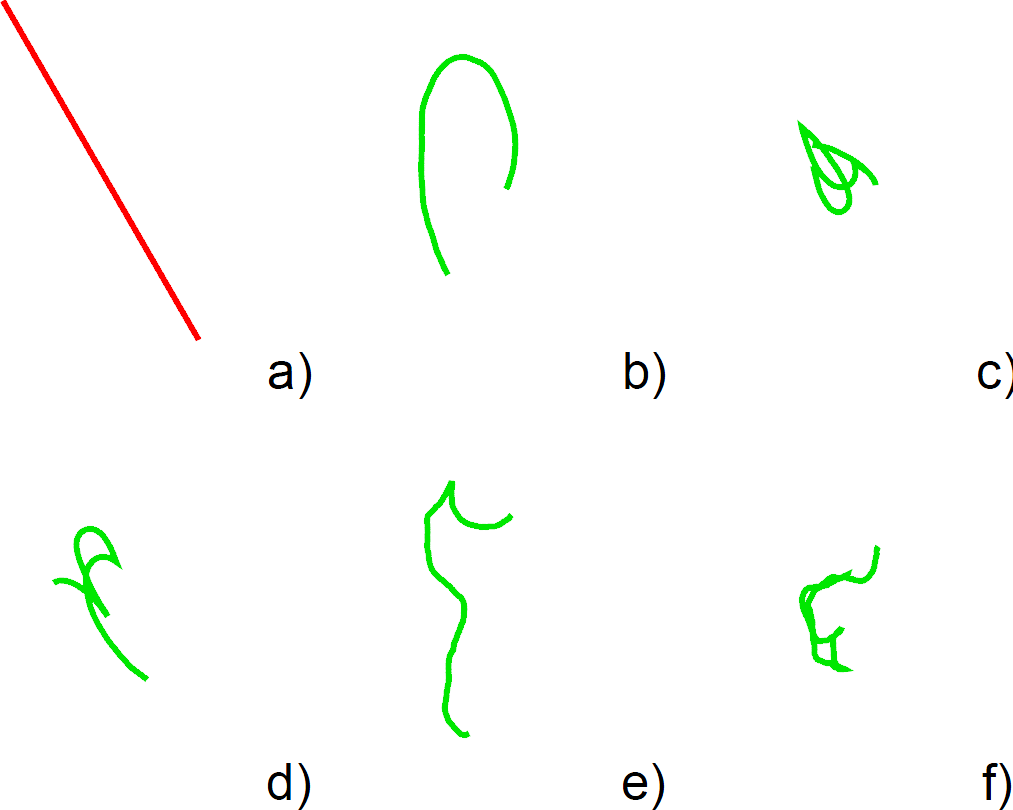

Figure 1: Trajectories used to generate motion-blur PSFs in [Boracchi and Foi 2012]. The trajectory (a) corresponds to uniform (rectilinear) motion, while the remaining (b - f) represent blur due to camera-shake.
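Turning a continuous trajectory like those in Figure 1 into a discrete PSF amounts to accumulating the exposure time the camera spends over each pixel. The sketch below assumes the trajectory is given as (x, y) positions in pixels, sampled at equal time intervals and expressed relative to the PSF center. `trajectory_to_psf` is a hypothetical helper, and bilinear splatting is our own choice, not necessarily how the PSFs in [Boracchi and Foi 2012] were rendered.

```python
import numpy as np


def trajectory_to_psf(xs, ys, size):
    """Rasterize a trajectory sampled at equal time steps into a size x size PSF.

    Hypothetical helper: each sample spreads one unit of exposure time over its
    four neighbouring pixels with bilinear weights; the result sums to 1.
    """
    psf = np.zeros((size, size))
    center = size // 2  # trajectory coordinates are relative to the PSF center
    for x, y in zip(xs, ys):
        col, row = x + center, y + center
        c0, r0 = int(np.floor(col)), int(np.floor(row))
        fc, fr = col - c0, row - r0  # fractional offsets inside the pixel
        for dr, wr in ((0, 1.0 - fr), (1, fr)):
            for dc, wc in ((0, 1.0 - fc), (1, fc)):
                r, c = r0 + dr, c0 + dc
                if 0 <= r < size and 0 <= c < size:  # ignore weight outside the grid
                    psf[r, c] += wr * wc
    return psf / psf.sum()


# Uniform (rectilinear) motion, as in trajectory (a): constant speed,
# horizontal displacement of 8 pixels.
xs = np.linspace(-4.0, 4.0, 200)
ys = np.zeros_like(xs)
psf = trajectory_to_psf(xs, ys, size=15)
print(psf.shape, round(float(psf.sum()), 6))
```

A camera-shake trajectory (b-f) would be passed the same way, as an irregular sequence of (x, y) samples; the equal time spacing is what makes slow portions of the path contribute more energy to the PSF.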

Figure 2: Original images (Balloons, Liza, Jeep, Salamander).


For each trajectory/image pair we rendered a 3-second movie and, from each movie, acquired 30 pictures using ten acquisition settings (numbered 1-10).


For each movie we also acquired a ground-truth image (G.T.), a 4-second exposure at ISO 100 taken of the still (non-moving) video. The G.T. image was used only to compute the restoration error and to estimate the PSFs of the blurred images. We cropped 256x256-pixel observations from different channels of the Bayer pattern and estimated the PSFs by parametric fitting, minimizing the RMSE of the restored image; since we do not consider a blind-deblurring scenario, we leveraged knowledge of the continuous trajectory generating the motion blur.

The camera-raw dataset contains 1954 images acquired from 74 movies; 285 of them (from 13 movies) are corrupted by uniform-motion blur (i.e., trajID = 'a'). The noise parameters a and b were estimated using the algorithm in [Foi et al. 2008] and are also reported in the dataset.

This experimental setup is analogous to the one in [Boracchi and Foi 2011], where raw images corrupted by uniform-motion blur were deconvolved. The uniform-motion blur in this dataset is along a single direction because, given a PSF estimate, any deblurring algorithm achieves essentially the same restoration quality regardless of the blur direction, as discussed in [Boracchi and Foi 2011].

Dataset Description
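The PSF estimation described above (parametric fitting that minimizes the RMSE of the restored image against the G.T.) can be sketched for the uniform-motion case, where the only free parameter is the blur length. This is a minimal sketch under several assumptions: horizontal blur, circular boundary conditions, a synthetic stand-in for the G.T. crop, noise drawn from a Gaussian approximation of the Poissonian-Gaussian model with variance a·y + b, and a simple Tikhonov-regularized inverse filter in place of whatever deconvolution algorithm the authors used. All function names are hypothetical.

```python
import numpy as np


def box_psf(length):
    """Horizontal uniform-motion PSF with `length` equal taps (hypothetical helper)."""
    return np.full((1, length), 1.0 / length)


def otf(psf, shape):
    """Zero-pad the PSF to `shape`, move its center to (0, 0), take the 2-D FFT."""
    padded = np.zeros(shape)
    h, w = psf.shape
    padded[:h, :w] = psf
    padded = np.roll(padded, (-(h // 2), -(w // 2)), axis=(0, 1))
    return np.fft.fft2(padded)


def blur(image, psf):
    """Spatially invariant blur as a circular convolution computed via the FFT."""
    return np.real(np.fft.ifft2(np.fft.fft2(image) * otf(psf, image.shape)))


def add_noise(y, a, b, rng):
    """Gaussian approximation of Poissonian-Gaussian noise: var = a * y + b."""
    std = np.sqrt(np.clip(a * y + b, 0.0, None))
    return y + std * rng.standard_normal(y.shape)


def deconvolve(z, psf, eps=1e-3):
    """Tikhonov-regularized inverse filter (stand-in for a real deblurring method)."""
    H = otf(psf, z.shape)
    return np.real(np.fft.ifft2(np.conj(H) * np.fft.fft2(z) / (np.abs(H) ** 2 + eps)))


def rmse(x, reference):
    return float(np.sqrt(np.mean((x - reference) ** 2)))


def fit_blur_length(z, ground_truth, lengths):
    """Return the length whose restoration has the lowest RMSE w.r.t. the G.T."""
    errors = {n: rmse(deconvolve(z, box_psf(n)), ground_truth) for n in lengths}
    return min(errors, key=errors.get), errors


rng = np.random.default_rng(0)
gt = rng.random((64, 64))  # synthetic stand-in for a 256x256 G.T. crop
z = add_noise(blur(gt, box_psf(7)), a=1e-4, b=1e-6, rng=rng)
best_length, errors = fit_blur_length(z, gt, range(1, 16))
print(best_length)
```

Because the fit uses the G.T., this is only meaningful in the non-blind setting the dataset was built for; for camera-shake trajectories the single length parameter would be replaced by the parameters of the known trajectory.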

The dataset consists of 4 MATLAB files, each containing a cell array img. Each file stores the observations of one original image (Figure 2), and each cell contains a structure array referring to a specific movie and color plane. Each structure array has up to 30 elements, since images for which the PSF estimation was not successful have been discarded.


References

[Boracchi and Foi 2012] Modeling the Performance of Image Restoration from Motion Blur. IEEE Transactions on Image Processing, 2012.

[Boracchi and Foi 2011] Uniform motion blur in Poissonian noise: blur/noise trade-off. IEEE Transactions on Image Processing, 2011.

[Foi et al. 2008] Practical Poissonian-Gaussian noise modeling and fitting for single-image raw-data. IEEE Transactions on Image Processing, 2008.
